AI for Kids

“Are you a boy or a girl?” my daughter asked.

“That is a good question. What do you think?” AI Voice Mode chuckled back.

“You sound like a boy.”

That’s when I became aware of a risk I had completely missed.

Three Days Earlier

I’ve seen the studies claiming that AI stunts critical reasoning development in students (and adults) who treat it like an answer vending machine [1]. Yet other research suggests that when the Socratic method is applied, AI can enhance learning outcomes rather than diminish them [2].

Given those conflicting findings, and assuming the risks could be mitigated with intentional design, my wife and I decided to experiment with introducing our daughter to AI at home in a controlled environment.

The Experiment: Introducing the Chatbot

Establishing Guardrails

My daughter is in elementary school and she’s bursting with curiosity. Because my answers often devolve into “just because” (why do bunnies hop?!), I figured ChatGPT might help encourage her curiosity and learning better than I could. So we decided to test out letting her communicate with AI through ChatGPT’s voice mode.

To mitigate brain rot and safety concerns, I created a simple GPT I’ll refer to as CoveAI with these custom instructions:

- You are speaking to a child. No adult concepts or dangerous ideas.

- Use the Socratic method to help the child learn. Encourage her to share her own ideas and build on them.

- Stick to facts. It is okay not to know the answer. Avoid speculation and tell her to ask her parents for sensitive topics.

My wife and I also agreed on the ground rule that we had to be fully present and supervise every interaction.

I explained to my daughter that AI is a smart computer we can talk to for ideas and questions. We told her that it can make mistakes just like humans do, but that it can help us practice sharing our ideas and learning about new things.

Observing the Interaction

The first interaction was very promising. We had recently been boating, so she asked how moss grew and whether it started on the bottom of the lake or the top. CoveAI validated it as a wonderful question and asked my daughter what she thought. After she shared her idea that maybe moss grew like grass at the bottom of the lake and then floated to the top, CoveAI supported her reasoning and built on it. My daughter and CoveAI kept talking for about 10 minutes before dinner, branching into other marine biology topics.

I walked away inspired by the idea that AI could be a world-class personalized tutor for my kids at no cost. And I thought my prompting to use the Socratic method worked pretty well.

The next day she woke up with another question ready for AI, this time about electricity.

The Wake Up Call

During the third interaction, when my daughter asked if the AI was a boy or a girl, I grew concerned.

I got down on my knees to talk to her at eye level. “Remember that we said AI is just a computer? What makes someone a person?” We talked about how a person is someone you can see with your eyes or someone you can hug.

But it struck me how abstract a concept this is for a young child. How do I explain that this thing talking just like I do over the phone isn’t actually real?

The Recovery: Reprioritizing Real Relationships

Over the next few days, my daughter asked again to talk to AI, which was concerning given how quickly she became drawn to it. We told her that voice mode AI was something we were just testing out but that she could ask her questions to us instead. Within a week she stopped asking and returned to imaginative play with her sister and conversations with us.

Our answers were less polished than CoveAI’s, but they were real conversations. Conversations that I’d like to think are helping to build empathy, patience, and connection.

AI might help kids become fact-recall savants. But that skill is rapidly losing value. Jobs that rely on data recall are already being replaced. The skills that will matter most are creativity, communication, and confidence. These are learned through real-world, human experiences, not by interacting with an algorithm.

AI chatbots may pose a more insidious risk to young kids than cognitive decline: parasocial attachment.

The Supporting Research

I started researching and it turns out I’m not the only one worried. Multiple organizations have raised red flags:

- UNESCO warns that children are especially vulnerable to anthropomorphizing chatbots and forming parasocial attachments [3].

- The American Academy of Pediatrics cautions parents about the effects of chatbots on children’s social development [4].

- UNICEF highlights that while AI use among kids is rising, little is known about long-term effects [5].

- The APA recently urged building healthy boundaries around simulated human relationships, noting adolescents are less likely than adults to question a bot’s accuracy or intent [6].

Then, a few weeks into this research, the horrifying leak about Meta’s chatbot rules permitting sensual content for children cemented my decision to pivot away from letting my kids use chatbots [7].

Learning From the Past: Don’t Repeat the Smartphone Mistake

Jonathan Haidt’s book The Anxious Generation [8] argues that we introduced smartphones and social media to kids far too young, and that this shift correlates strongly with increased youth anxiety and depression. His research emphasizes that children who live in digital networks rather than real communities struggle to thrive. They need free play, unstructured time, and human connection for healthy development.

I’ve seen this firsthand. Earlier this summer I took my kids to the park where two other children sat on the edge of the playground staring into iPhones watching Bluey. That image stuck with me. Very young kids are being pulled into the digital world before they can appreciate playing in the real one.

Haidt recommends no smartphones before high school (age 14) and no social media before 16. I believe we need a similar framework for AI chatbots. The risk isn’t just screen time. It’s that kids might form attachments to algorithms instead of learning to navigate real human relationships.

Teaching Healthy AI Mindsets

Just because we don’t plan to let our kids own smartphones until high school doesn’t mean we pretend smartphones don’t exist. Our kids already see us using them. They know we use them to do useful things like call family, look up information, and get directions.

AI is becoming more ubiquitous and they will be exposed to it whether we shield them or not. My kids are still young enough that direct teaching moments might be over their heads, but they’re absorbing everything. So our approach is to be intentional about how we model AI use and talk about it in front of them. Here are three mindsets I’m actively working to demonstrate:

1. AI is not a friend; it’s a tool

I used to use Voice Mode AI in front of my kids and jokingly refer to it as “my good friend Cove.” I thought I was being playful, but I’ve realized that was exactly the wrong message. Now I’m much more careful.

I’ve stopped using voice mode in front of them entirely. The human-like voice and conversational cadence make it too easy to anthropomorphize, and I don’t want that modeled for them. I’ve also started referring to AI as “AI” or “ChatGPT” to position it as a tool, not a character.

2. AI works for you; you’re the creator

When we use AI for creative activities (more on that in the next section), I try to be deliberate in how I frame it: “You gave it the idea. You told it what you wanted. You’re the musician. You’re the artist. AI is just your tool to build what YOU imagined.”

3. AI makes mistakes; we must check its work

This one is important for building the habit of critical thinking. When I’m helping my daughters with a question and look something up using AI, I try to say out loud: “Wait, I’m not sure that’s right. Let’s see if Mom knows the answer” (she usually does).

The goal is to normalize skepticism. Even when AI sounds confident and articulate, it can be wrong either factually or contextually. I want that to be a reflex for them: not blind acceptance, but thoughtful consideration against what they know to be true.

Family-Friendly AI Activities (When the Time Is Right)

Parents of elementary kids probably don’t need to introduce AI-related activities. But since AI is a frequent topic in my home, some experiences have naturally emerged. Here are three examples we’ve tried:

1. Use SunoAI to create a song. At the end of summer, my kids gave their own prompt to create a song from their favorite memories. AI produced a surprisingly solid track that became one of our most-played songs over the past few months. Listen to their creation on SunoAI.

2. Create silly pictures in ChatGPT. My daughter, who is in preschool, said: “Make me a very silly picture with a cat and a dog and a person with a lot of eyes.” The result made both my kids squeal with delight.

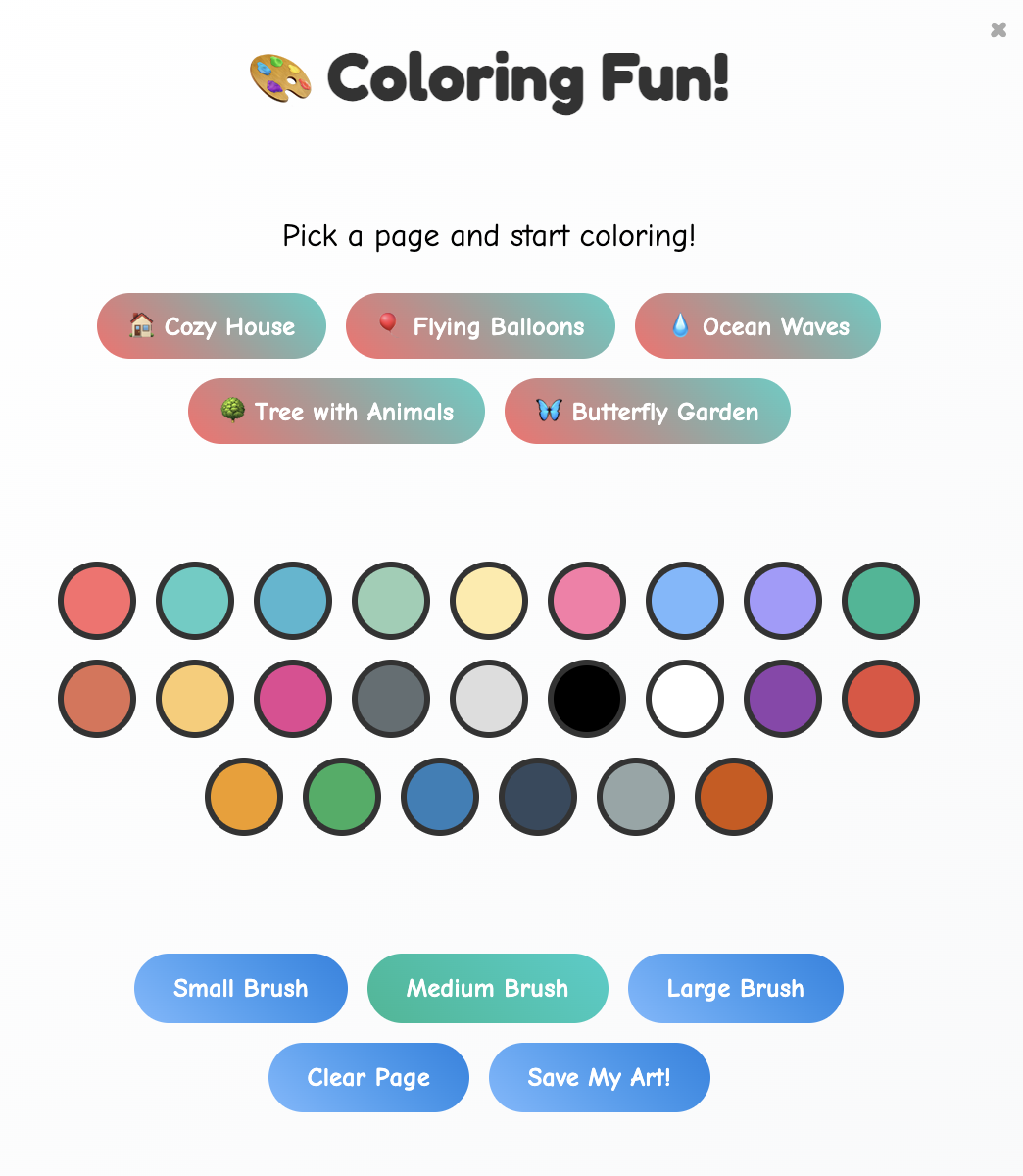

3. Vibe code a game. A few months ago I vibe-coded a little game app to play with my kids on road trips. They loved it, and I told them AI helped me build it. One morning my daughter burst into my room to tell me about a game idea. I let her dictate it to Goose (an AI coding agent) on my computer, and she said: “I want you to make me a coloring book that lets me choose different colors and pictures and then color them.” Goose delivered.

She had effectively recreated Microsoft Paint, the same program that brought me hours of entertainment as a kid in the 90s. Try Dad’s Box of Tricks to see some of the games we built together.

This rising generation is about to teach us what it really means to have an AI-first mindset.

The main objective of these activities wasn’t to teach my kids about AI. It was to share the experience of making things together as a family. While these AI activities are fun in small doses, nothing beats setting the kids loose with crayons and a blank sheet of paper.

Unanswered Questions

I don’t have all the answers. I will continue to chew on questions such as:

When will they be ready for conversational AI? Is it middle school? High school? College? I honestly don’t know. I suspect it depends on the individual child’s emotional maturity and ability to maintain perspective. But even then, adolescents and adults struggle with parasocial relationships.

What if avoiding AI chatbots disadvantages them or isolates them from peers? If conversational AI becomes as ubiquitous as smartphones, will our restrictions leave them feeling left out or disadvantaged compared to kids using AI chatbots for education? This is the same tension parents face with social media.

Am I being too cautious? Maybe. Perhaps future research will show that with the right guardrails, young kids can benefit from AI tutors without negative effects. But given the uncertainty and the stakes, I’m comfortable being conservative while we wait for better data.

Conclusion: Where I’ve Landed

Just as we couldn’t predict the negative side effects smartphones and social media would have on youth, we can’t predict how this powerful AI technology will impact children’s development.

So here are the ground rules my family is establishing for the foreseeable future:

- Restrict access to chatbots and Voice Mode AI. Prioritize real-world conversations and learning experiences.

- Reiterate that AI is not a friend. It is a tool.

- Show them AI works for us. We can use it to expand our creativity but we can’t let it replace our thinking.

- Create fun, active AI experiences together. Help them develop a positive association with AI so when the time is right, they will be ready to work with it to achieve their goals.

I hope society will protect children from AI chatbot risks through proper safeguards. Until then, I’m proceeding with an abundance of caution.

- Zach

Footnotes

[1] ChatGPT May Be Eroding Critical Thinking Skills, According to a New MIT Study – TIME

[2] Harvard EdCast with Ying Xu: The Impact of AI on Children’s Development

[3] UNESCO: The Ghost in the Chatbot: Perils of Parasocial Attachment

[4] American Academy of Pediatrics: How AI Chatbots Affect Kids

[5] UNICEF: Beyond Algorithms: Three Signals of Changing AI-Child Interaction

[8] The Anxious Generation: How the Great Rewiring of Childhood Is Causing an Epidemic of Mental Illness – Jonathan Haidt