Doug's Roses: The Ethical Complexity of Using AI

Doug’s Roses

In The Good Place (Season 3, Episode 10), there’s a scene that captures the moral complexity of living in a modern, interconnected world.

Michael visits the Accounting Department in the afterlife and compares two men who performed the same action, centuries apart:

Why did Doug lose points? Michael explains that because of the complexity of the modern globalized world, the simple act of buying flowers was tied to a web of unethical systems:

- He ordered the roses using a cell phone made in a sweatshop.

- The flowers were grown with toxic pesticides.

- They were picked by exploited migrant workers.

- The delivery truck contributed to global warming.

- The money he paid went to a billionaire CEO who used it to perpetuate harassment.

The message? Nobody has gotten into the Good Place in over 500 years. Not because humans became worse, but because the world became so interconnected that even well-intentioned actions carry invisible ethical weight.

I can’t help but wonder how my own recent AI experiment might score on this same hypothetical ledger.

- The AI was trained on images scraped without artist consent.

- AI data centers consumed thousands of gallons of water, depleting natural resources.

- An illustrator might have gotten the job if AI didn’t exist.

- The evangelizing of AI automation threatens to displace millions of workers.

This is the kind of ethical complexity I keep bumping into with AI. And I’ve started to feel the weight of enthusiastically supporting something that many people find deeply concerning.

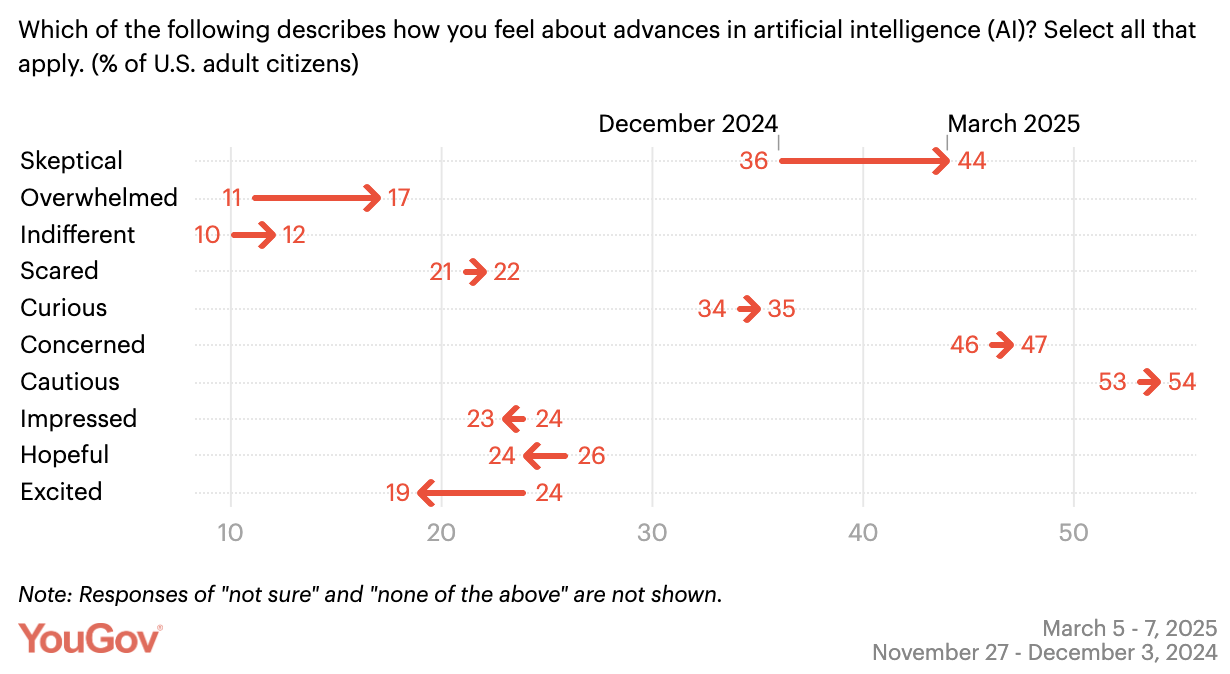

American Skepticism Is Climbing

A recent YouGov survey found that Americans are increasingly skeptical about AI. 40% now believe AI will have a negative effect on society, up from 34% just a few months earlier. The share of people feeling “skeptical” about AI jumped from 36% to 44%.

I continue to be amazed by how quickly AI is progressing and delivering value, but I’m also aware that my enthusiasm isn’t universally shared. A significant portion of the population sees AI as a threat, not a tool.

That tension prompted me to think more carefully about how I engage with and talk about AI. So I decided to dive into the research on the concerns I have that tend to align with the criticisms I hear from others. I’ve organized my thinking into three areas: ethics, environment, and job displacement.

The Ethical Concerns

One of my favorite courses at my university was Business Ethics. It’s what got me hooked on Marcus Aurelius and sparked a habit of overthinking things. One of the ethical frameworks that resonated with me was Kant’s categorical imperative: act only according to rules you could will to become universal law.

That framework became my guide when I faced a series of decisions about the storybook we created. (Check out my blog post on AI for image generation for a recap of how my partner and I used AI to illustrate a children’s book about our family’s Dutch heritage.)

The Transparency Question

When we used Gemini to create the illustrations, every AI-generated image had a small watermark in the corner. My partner and I debated cropping it out to make the book look more polished.

But I kept coming back to Kant. If everyone removed AI watermarks, we’d lose the ability to distinguish synthetic content from reality. And that’s not a hypothetical concern. According to the same YouGov study, “the spread of misleading video and audio deepfakes” is the number one concern Americans have about AI. As these tools become more sophisticated, the line between real and generated content is getting harder to see.

We decided to keep the watermark. The AI watermark is a mark of honesty. If AI-generated content is clearly labeled, it allows human-created art to be valued more highly.

I’m not naive. People are going to see AI as a shortcut for creating value, and some won’t care about transparency at all. But fortunately, enforcement is coming. The California AI Transparency Act (SB-942) just went into effect on January 1, 2026, requiring clear labeling of AI-generated content. Normalizing the watermark and supporting regulation like this may help reduce the deepfake risk over time.

The Commercialization Question

Our original motive was simple: gift the book to our kids and my grandmother. But then some of my extended family was interested in getting a copy. I considered listing the book on Amazon so people could order directly. Simple logistics, right?

But the question kept nagging at me: just because I can, does that mean I should?

The more I thought about it, the more uncomfortable I became. AI image models are trained on datasets that likely include copyrighted works scraped without artist consent. Commercializing something that I didn’t really have creative ownership over didn’t sit right.

So I ran it through Kant’s test: would I be okay if everyone commercialized AI-generated content? My answer was no. I don’t want my Amazon feed overtaken by synthetic content. If I’m not willing to accept that future, I shouldn’t contribute to it.

We decided to keep it personal by just printing copies for our kids, my parents, and my grandmother.

The Distribution Question

That decision led me to think more broadly about where AI content belongs.

I find AI-generated content to be a huge unlock personally. I lack the artistic skills to bring my ideas to life, but AI lets me do that. I’ve enjoyed creating music with my own lyrics using Suno.ai. These creations bring personal enjoyment, but I don’t expect or need the world to appreciate them.

My hope is that we can keep AI in its lane. I’ll continue using Suno.ai to create music, but I won’t upload AI-generated songs to Spotify. I don’t want synthetic content drowning out human musicians. The same applies to Amazon, art galleries, and TV streaming services.

AI is a powerful tool for personal creation. But human platforms should stay human.

The Environmental Concerns

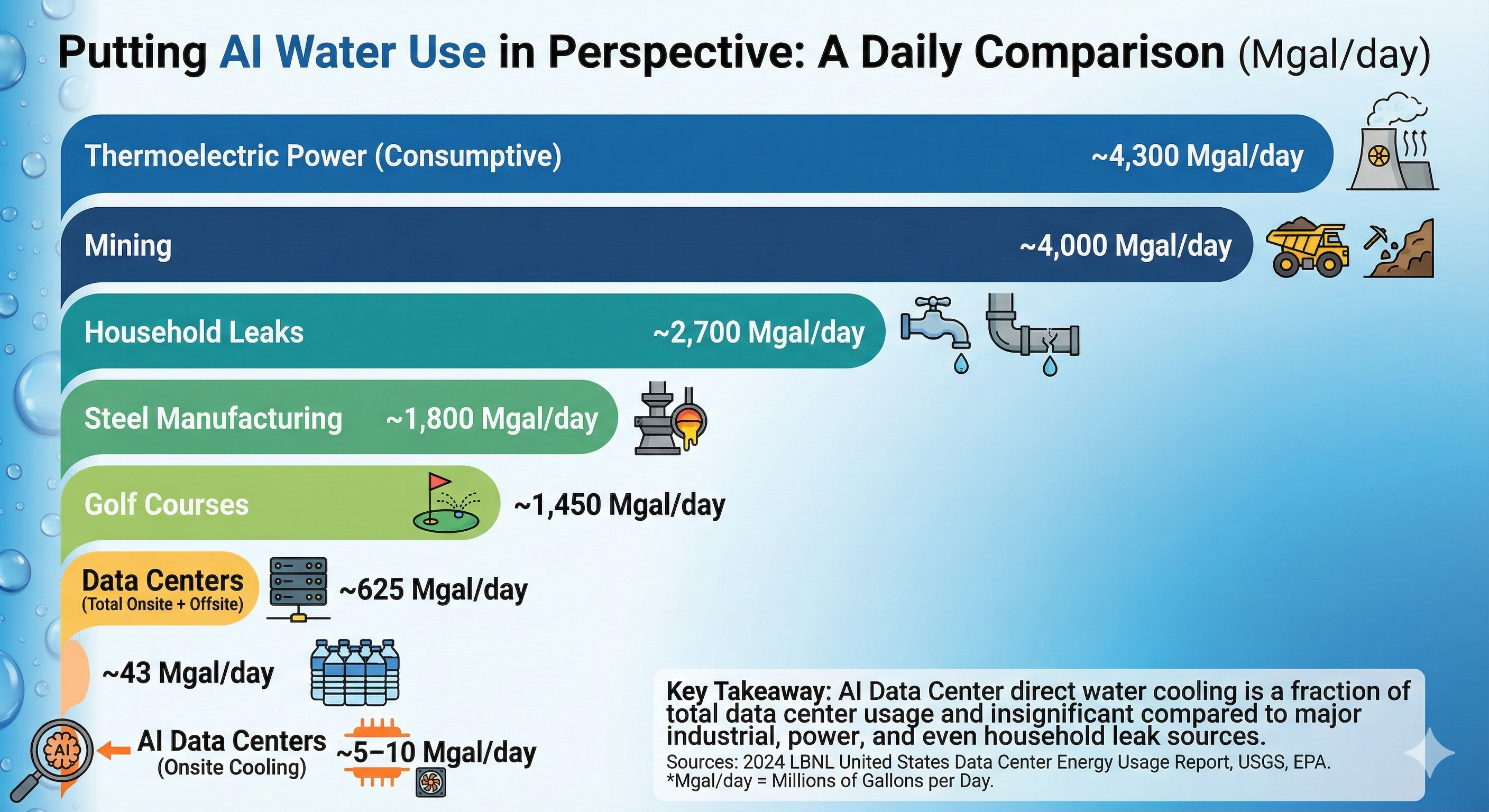

When I bring up AI in casual conversations with friends or family, one of the most common pushbacks I hear is about the environment. “I don’t use AI because it’s destroying the planet. Have you seen how much water data centers consume?”

For a while, I didn’t have a great response. It felt like a legitimate concern, so I did some research.

Frankly, I think these concerns are overblown.

According to the 2024 United States Data Center Energy Usage Report from Lawrence Berkeley National Laboratory, estimates of daily water usage across all US data centers range from around 50 million gallons for cooling alone to a high estimate of 628 million gallons. AI workloads represent roughly a tenth of all data center usage. And much of that cooling water is recycled, not evaporated.
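For anyone who wants to check the arithmetic, here is a rough back-of-the-envelope sketch of what those figures imply for AI specifically. It assumes AI's share of water use matches its roughly one-tenth share of overall data center usage, which is my simplification and not something the report states; the function and variable names are just for illustration.

```python
# Figures quoted above, in millions of gallons per day (all US data centers).
HIGH_ESTIMATE_MGD = 628.0
COOLING_ONLY_MGD = 50.0

# Assumption (not from the report): AI's share of water use
# equals its ~10% share of overall data center usage.
AI_SHARE = 0.10


def ai_water_range(low_mgd: float, high_mgd: float, ai_share: float) -> tuple[float, float]:
    """Return the (low, high) water use attributable to AI, in millions of gallons per day."""
    if not 0.0 <= ai_share <= 1.0:
        raise ValueError("ai_share must be between 0 and 1")
    # Scale both ends of the range by the assumed AI share.
    return (round(low_mgd * ai_share, 1), round(high_mgd * ai_share, 1))


low, high = ai_water_range(COOLING_ONLY_MGD, HIGH_ESTIMATE_MGD, AI_SHARE)
print(f"AI share of US data center water use: roughly {low} to {high} million gallons per day")
```

Under that assumption, AI accounts for somewhere between about 5 and 63 million gallons per day nationwide, depending on which estimate you start from.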

To put this in perspective:

Data source: Lawrence Berkeley National Laboratory

As Andy Masley points out in his analysis “The AI Water Issue is Fake”, AI data centers use less water than golf courses. Less than household leaks. Less than mining, livestock, and steel production. It’s not nothing, but it’s also not the environmental catastrophe the headlines suggest.

I care about climate. I think it’s worth monitoring AI’s environmental footprint as the technology scales. But if we’re genuinely worried about resource depletion, there are bigger fish to fry. Converting golf courses to artificial turf would save more water than eliminating AI entirely.

And here’s the counterargument that gives me hope: AI might actually help us solve climate problems faster. Researchers are using AI to optimize energy grids, accelerate materials science for batteries and solar panels, and model climate patterns with unprecedented accuracy. DeepMind’s AI reduced Google’s data center cooling energy by 40%. The technology that consumes resources might also be the technology that helps us consume fewer of them.

The Job Displacement Concerns

Of the three concerns, this is the one that weighs on me most.

When I think about illustrators, I feel this tension acutely. Pre-AI, we might have considered hiring someone to illustrate our book. That’s theoretically income a creative professional won’t receive because we used a tool instead. Multiply that across millions of similar decisions, and you can see why the creative community is anxious.

I’ve made a career out of automating business processes. I’ve always found satisfaction in outsourcing tedious manual work to a computer program so I can focus on more interesting, strategic tasks. But now AI is allowing me to automate away entire categories of skilled work. That feels different.

A mental model called Creative Destruction helps me manage this concern. The economist Joseph Schumpeter argued that technological progress destroys some jobs while creating others. Historically, this has often held true. ATMs didn’t eliminate bank tellers; the number actually increased because banks could open more branches at lower cost. The World Economic Forum’s Future of Jobs Report 2025 projects that while 92 million jobs could be displaced by 2030 across AI and other macro trends, 170 million new ones could be created. And most AI impact falls on the augmentation side of the spectrum: enhancing human productivity rather than replacing workers entirely.

We saw similar patterns with the rise of e-commerce. Despite campaigns to shop local, many wonderful mom-and-pop businesses dried up as eBay and Amazon took their customers. We lost something real: thoughtfully decorated window displays replaced by algorithms and sponsored ads. But I don’t want to go back to a world where I have to drive to the store for every purchase. Online shopping gives me back time to spend doing things I enjoy.

That’s not to say the transition wasn’t painful for many business owners, and I expect the AI revolution to be painful for many workers, especially creative professionals. But rather than resisting the inevitable tide or downplaying my experience with AI, I can help others adapt and see that they can use AI to amplify their skills rather than fear being displaced by it. I can also support local artists and back policies that help displaced workers.

Here’s the other reality: we would never have paid an illustrator several thousand dollars for a book we planned to print only a few copies of. AI enabled a personalized creation that simply wasn’t possible given our constraints. The technology unlocks new possibilities rather than just replacing old ones.

The Underlying Bet

All three of these concerns share a common thread: they assume AI advancement comes at a cost. And that’s true. But they miss the other side of the ledger.

If history is any guide, technological advancement lifts all boats.

AI promises to help cure devastating diseases, address climate challenges, and solve problems we haven’t yet imagined. The potential upside is too significant to abandon because of growing pains.

My optimism is grounded in a book I read several years ago by Steven Pinker called Enlightenment Now: The Case for Reason, Science, Humanism, and Progress. Pinker makes a compelling case that technological advancement improves quality of life for humanity at large. One of the most striking charts: in 1820, 90% of the world lived in extreme poverty. By 2015, less than 10% did. (Watch Pinker present this data)

That belief is why I continue to use and evangelize AI despite the tradeoffs. The question for me isn’t whether to embrace it, but how to do so responsibly.

Conclusion

I started this blog to document my experiments with AI. This essay is the culmination of thoughts and conversations I’ve been having with friends and family, which I finally put down on paper to ground my thinking.

Both things can be true: I’m excited about what AI enables, and I understand why others are skeptical. My opinion might differ if I were a creative professional who felt this tool threatened my identity. And I plan to hold space for those conversations and acknowledge that the transition may be painful for many. But I take the long-term view that AI advancement will grow the economic pie and lift all boats eventually.

Here are the mental models I’m using to manage the cognitive dissonance:

- Use AI with integrity. Only in ways you would will to become universal law. Be transparent about origins and normalize the AI watermark.

- Stay grounded in evidence. Environmental costs are worth watching, but let data guide your concern, not headlines.

- Help others adapt. Technological change has always created new opportunities. Encourage people to use AI to amplify their skills rather than fear displacement.

Just like Doug couldn’t escape the interconnected consequences of buying roses, we can’t escape the tradeoffs that come from using AI. But we can still make choices that reflect our values, even when those choices are imperfect.

– Zach

Sources

- Andy Masley: The AI Water Issue is Fake

- Artnet: Class Action Lawsuit Against AI Image Generators

- California Legislature: SB-942 California AI Transparency Act

- DeepMind: AI Reduces Data Center Cooling Energy by 40%

- Lawrence Berkeley National Laboratory: 2024 United States Data Center Energy Usage Report

- Marcus Aurelius, Meditations

- McKinsey Global Institute: Generative AI and the Future of Work in America

- Stanford Encyclopedia of Philosophy: Kant’s Moral Philosophy

- Stanford HAI: 2025 AI Index Report

- Steven Pinker, Enlightenment Now: The Case for Reason, Science, Humanism, and Progress

- The Guardian: It’s the Opposite of Art: Why Illustrators Are Furious About AI

- University of Cambridge: AI in the UK Civil Service

- YouGov: Americans Are Increasingly Skeptical About AI’s Effects